Artists create work that the public interprets within a (different) context. In visual poetry this happens through white space, typography and color; in performance poetry, through analog and/or digitally distorted voice (timbre, pitch, volume...). Hybrid or XR art, in turn, introduces the user to predefined “experience contexts”, with attention to user experience (UX) and the user interface (UI). This human-machine interaction (HMI) and/or human-technology interaction (HTI) ensures that the user can engage (interact) with the technology used.

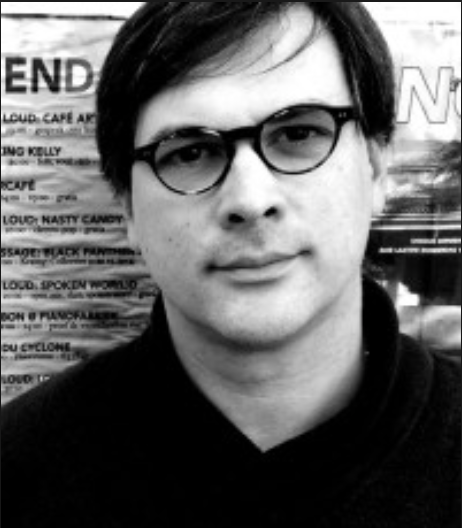

Various machine learning tools that convert speech to text have already been developed. In this research project, Philip Meersman explores how speech-to-text can contribute to an immersive live visual poetry performance. He also examines how it can foster interaction between performer, audience and environment, and how speech-to-text AI can interact within performance locations created for this purpose. To this end, he wants to visit expos, performances and events that already use these technologies. He will map and test these technologies by varying different parameters, or performance variables. The results will be presented as proofs of concept (POC) in poetry performances, among others at the final event of the 'Urban Travel Machine' project at the Heysel planetarium and in the Lange Zaal during ARTICULATE 2024.